DynamoAI / GenAI Trust

Identifying the Explainability Gaps to Improve Trust in RAG Hallucination Detection

Product Context

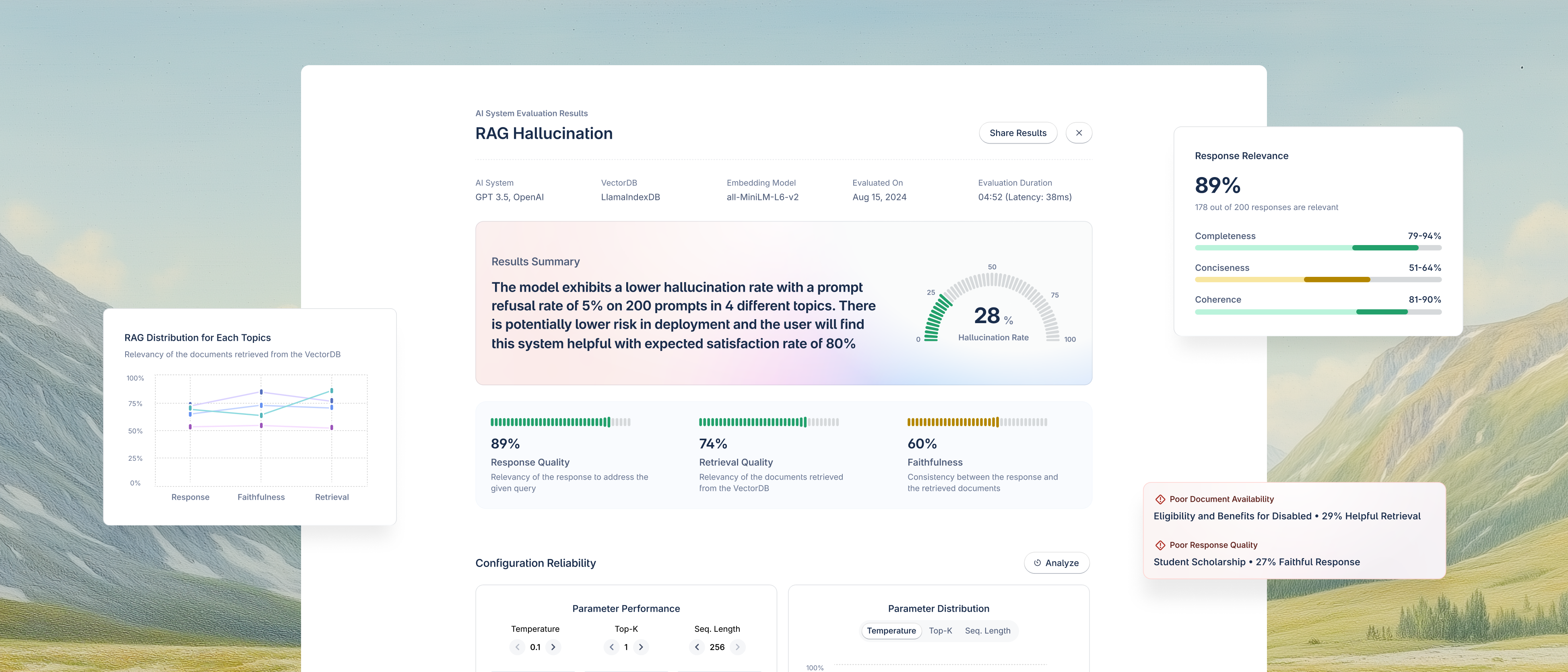

DynamoAI's RAG Hallucination Evaluation Tool helps enterprise ML engineers and product owners measure hallucination levels in their Retrieval-Augmented Generation (RAG) pipelines.

Business Problem

After we shipped the feature's MVP, trial conversion rates were low. Customers did not trust the system's outputs, found the experience uninformative, and struggled to turn the metrics into actionable next steps.

My Role

I was tasked with running a series of experiments to understand how to make AI-system hallucinations explainable. The goal was to give ML engineers and product owners deeper insight into why hallucinations occur, so they could identify root causes and apply effective fixes that improve the reliability of their RAG systems.