
SoundHapticVR: Exploring Multimodal Feedback in Virtual Reality

Context

In immersive VR environments, spatial sounds guide user behavior, attention, and interaction. However, users who are Deaf or Hard-of-Hearing often miss out on these spatial cues, limiting their ability to engage with VR content on equal terms. How can we enable DHH users to localize and identify sounds in VR environments without relying on vision or hearing?

Outcome

We built a head-based haptic device that attaches to a VR headset. With this prototype, we conducted three user studies with 25 Deaf and Hard-of-Hearing participants (ages 18–30), using a mix of psychophysical methods and interactive VR tasks to evaluate sensitivity, localization, and sound-type recognition. SoundHapticVR enabled DHH users to localize VR sounds with up to 89.9% accuracy using a head-worn 3-actuator haptic system. Users could also identify distinct sound types through tactile patterns alone, achieving an average recognition accuracy of 84%.

My Role

As this was part of my Master's Thesis, I led the research design, developed the VR prototype in Unity, and conducted user studies to evaluate the system’s effectiveness. This work was accepted as a full paper at ASSETS 2024 (≈25% acceptance rate) and published in the official conference proceedings.

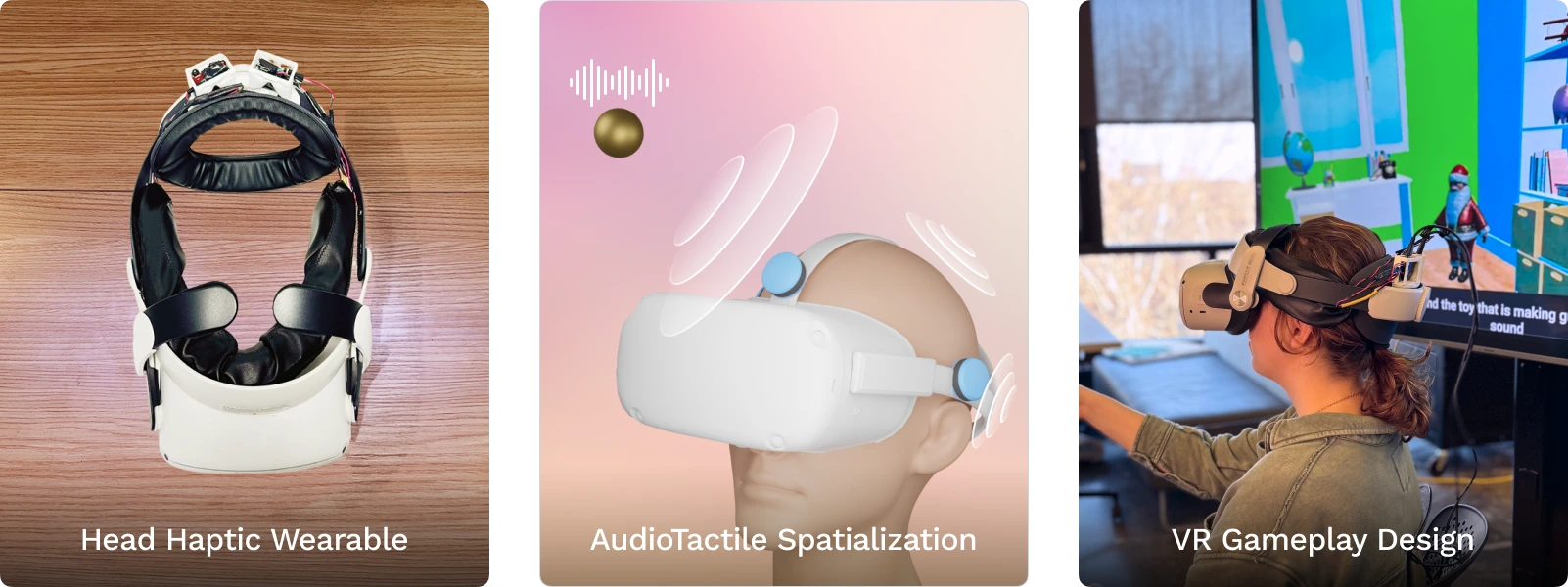

SoundHapticVR has three key components. First is the Head-Haptic Wearable, a device designed to deliver precise spatialized haptic feedback on the head. Second is the Audio-Tactile Spatialization Algorithm, running on a local server, which translates audio cues into spatialized haptic signals. Third is the VR gameplay environment, where Deaf and Hard-of-Hearing (DHH) users experience spatialized haptic feedback that supports their localization and navigation within the virtual world.

Component 1: Head-Haptic Wearable

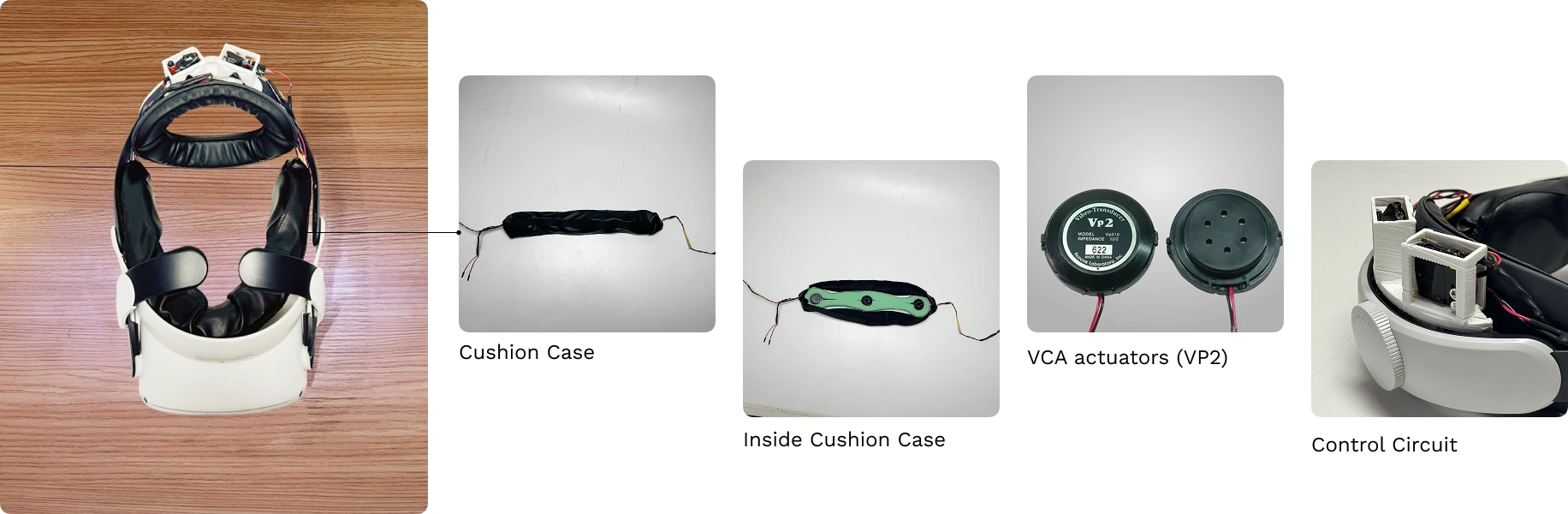

The hardware system consists of four voice coil actuators embedded into a soft, adjustable head strap, positioned at the left, right, front, and back of the head. It was designed for integration with the Meta Quest 2 headset, ensuring comfort, secure skin contact, and compatibility with immersive VR use.

These were some of our early explorations with the prototype, where we iterated to enhance stability, minimize vibration transmission across the headset, and keep the tactile feedback sharp and focused rather than blurred.

Component 2: Audio-Tactile Spatialization

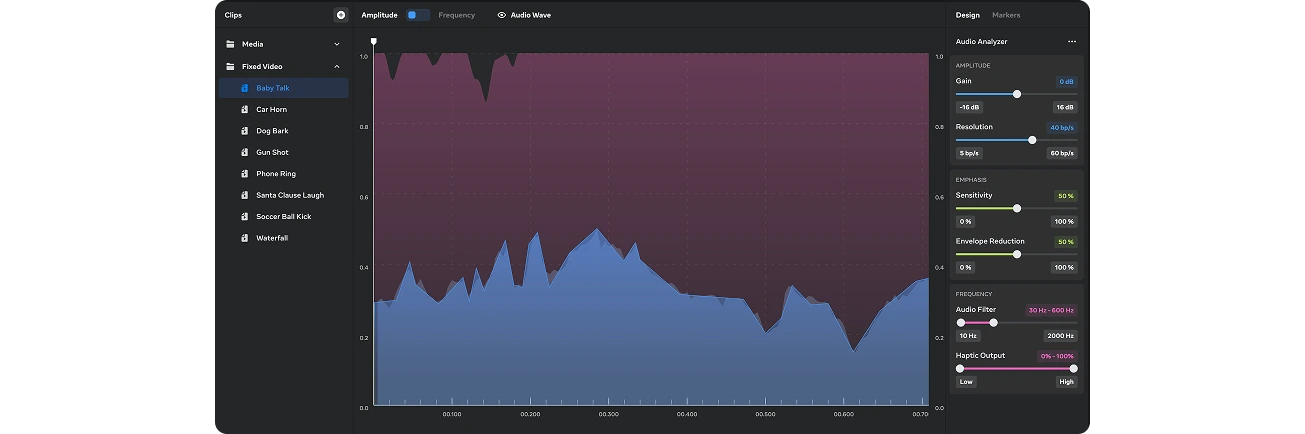

The second key component is the audio-tactile spatialization pipeline. We begin by converting the game’s audio files into corresponding tactile signals using the Meta Haptics Studio tool. These tactile files are then stored on a local server, ready to be retrieved based on sound source information received from the VR application.

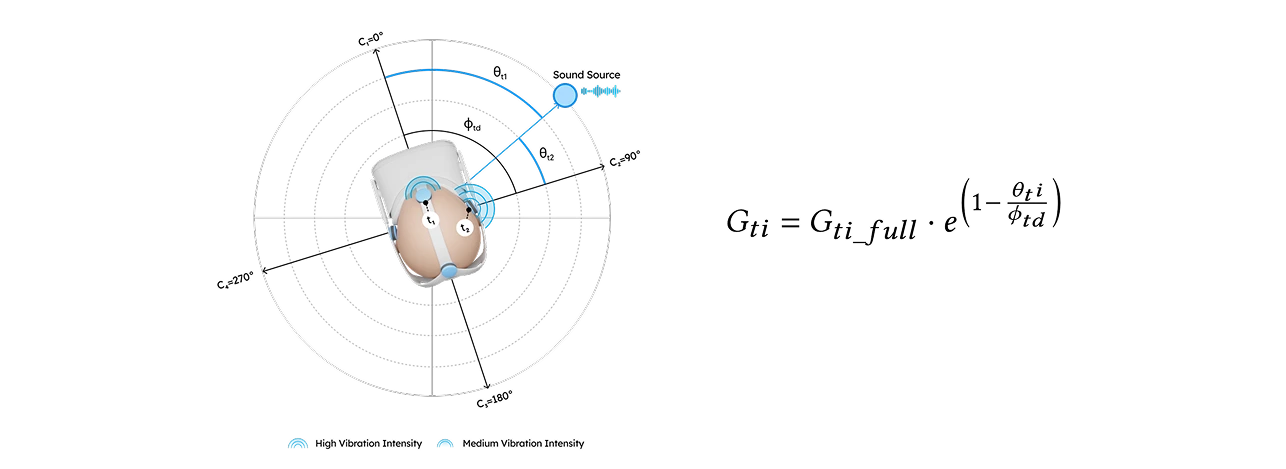

The next module in our audio-tactile spatialization pipeline is the tactile spatialization algorithm, which runs locally when the VR app is active. We developed a custom panning algorithm that spatializes sound across multiple channels instead of the traditional two-channel setup. This algorithm dynamically adjusts intensity on each actuator based on the relative angle between the user’s head and the sound source.
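The write-up does not give the panning formula, so the following is only a minimal sketch of one common approach, pairwise constant-power panning, and not necessarily the algorithm used in SoundHapticVR. The actuator azimuths, the `LRF` layout dictionary, and the `pan_gains` function are all hypothetical names chosen for illustration.

```python
import math

# Hypothetical layout: actuator name -> azimuth in degrees, clockwise from the front.
LRF = {"front": 0.0, "right": 90.0, "left": 270.0}


def pan_gains(source_azimuth_deg, actuators):
    """Return a gain in [0, 1] per actuator for a head-relative source azimuth.

    The source is panned between the two actuators that bracket its direction,
    using a cosine/sine law so the summed power stays constant.
    Assumes at least two actuators.
    """
    az = source_azimuth_deg % 360.0
    ring = sorted(actuators.items(), key=lambda item: item[1])
    gains = {name: 0.0 for name in actuators}
    for i, (name_a, az_a) in enumerate(ring):
        name_b, az_b = ring[(i + 1) % len(ring)]
        span = (az_b - az_a) % 360.0 or 360.0   # angular width of this actuator pair
        offset = (az - az_a) % 360.0            # how far the source sits past actuator A
        if offset <= span:
            t = offset / span                   # 0 at actuator A, 1 at actuator B
            gains[name_a] = math.cos(t * math.pi / 2)
            gains[name_b] = math.sin(t * math.pi / 2)
            break
    return gains


if __name__ == "__main__":
    for angle in (0, 45, 90, 180, 270):
        print(angle, {k: round(v, 3) for k, v in pan_gains(angle, LRF).items()})
```

With this layout, a source directly behind the user drives the left and right actuators equally, because no back actuator is present to take over.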

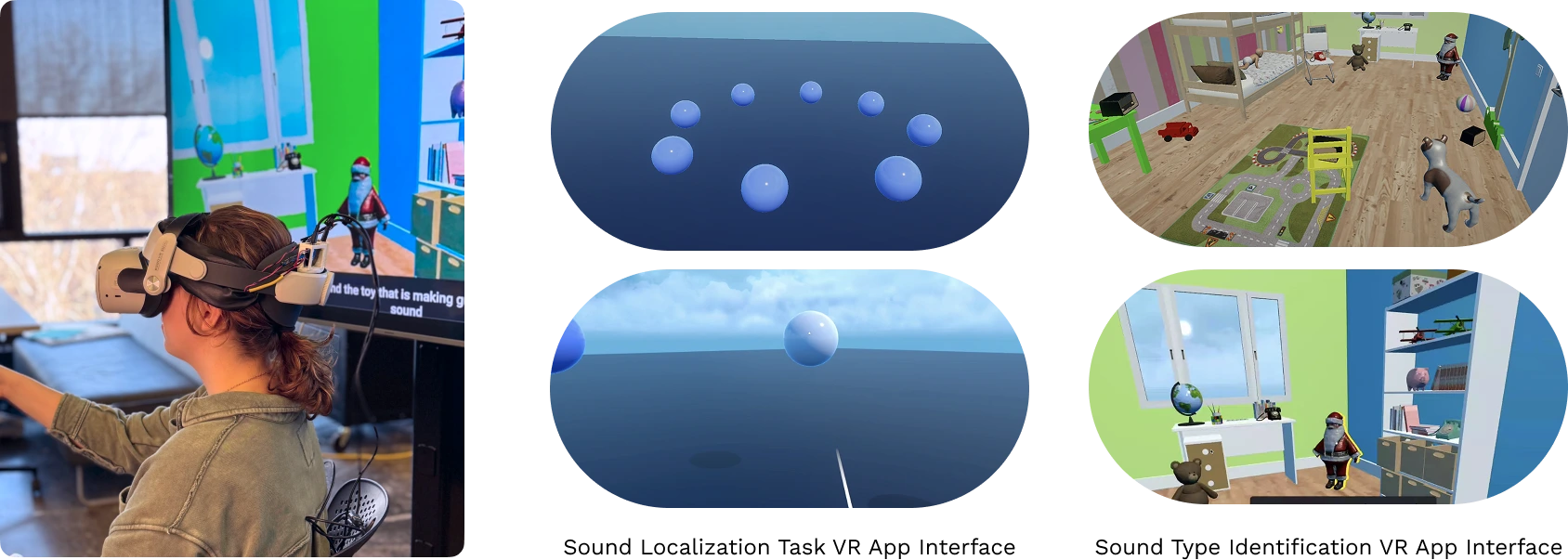

Component 3: VR Gameplay

The final component of our prototype is the VR gameplay, built in Unity. It communicates with the local server, sending head tracking and sound source information to spatialize tactile signals for accurate sound localization cues.
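As a rough illustration of how head tracking and sound source information could be combined on the server, the sketch below computes the source direction relative to where the head is facing. It assumes positions are given on the horizontal plane as `(x, z)` pairs with x pointing right and z pointing forward at zero yaw, and that yaw increases clockwise when viewed from above; the function name and argument format are hypothetical, not taken from the project.

```python
import math


def relative_azimuth_deg(head_pos, head_yaw_deg, source_pos):
    """Return the source azimuth relative to the head, in degrees within [0, 360).

    0 means straight ahead, 90 means to the right, 180 behind, 270 to the left.
    head_pos and source_pos are (x, z) pairs (assumed convention, see lead-in).
    """
    dx = source_pos[0] - head_pos[0]
    dz = source_pos[1] - head_pos[1]
    # World-space bearing of the source: 0 along +z, 90 along +x.
    world_azimuth = math.degrees(math.atan2(dx, dz))
    # Subtract the head yaw so the angle is expressed in the head's own frame.
    return (world_azimuth - head_yaw_deg) % 360.0


if __name__ == "__main__":
    # Head at the origin, turned 90 degrees to the right, source on the +x axis.
    print(relative_azimuth_deg((0.0, 0.0), 90.0, (1.0, 0.0)))
```

An angle of this kind is what a panning stage would need as input to decide how strongly each actuator should vibrate.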

Vibrotactile Sensitivity

RQ1: How do tactile sensitivity thresholds for DHH users vary for different positions on the head with an attached VR headset?

Preferred Configuration

RQ2: What is the ideal configuration on the head for effective tactile-based localization of sounds in VR?

Different Sound Types

RQ3: How well does the system convey a wide range of sound information through our audio-tactile transducers?

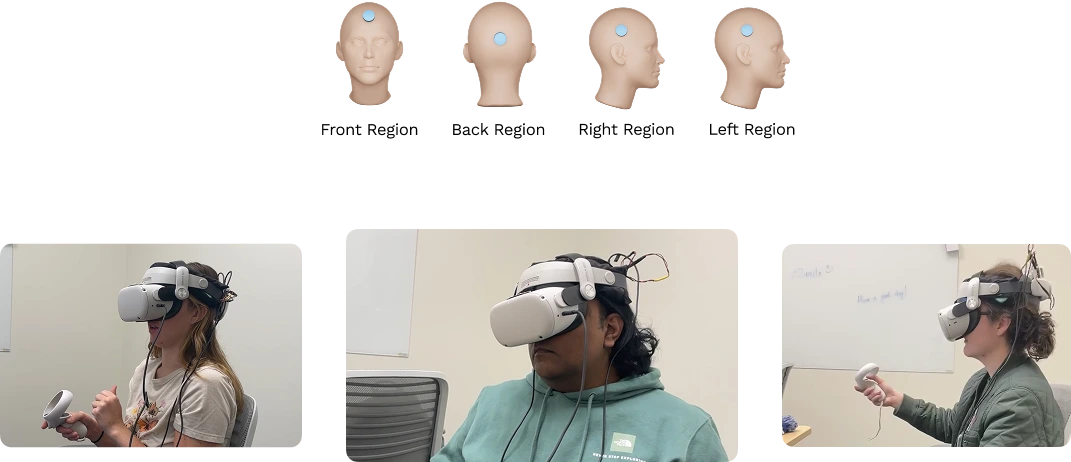

Vibrotactile Sensitivity Threshold Estimation

In this study, we aimed to identify the tactile frequency threshold across different regions of the head. We selected the four regions shown in the image below, based on prior research by Myles and his team on “Vibrotactile Sensitivity on the Head.” Twelve Deaf and Hard-of-Hearing participants took part, and we gathered frequency threshold data using the method of limits, a standard psychophysical procedure.
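For readers unfamiliar with the method of limits, here is a minimal sketch of the general procedure: the stimulus is stepped up (ascending series) and down (descending series) until the participant's response flips, and the threshold is taken as the mean of those crossing points. The frequency range, step size, number of repeats, and the simulated participant are illustrative assumptions only; they are not the study's actual parameters or data.

```python
from statistics import mean


def run_series(detects, levels):
    """Present levels in order and return the midpoint where the response flips.

    `detects` is a callable returning True if the stimulus is perceived.
    Returns None if the response never changes during the series.
    """
    previous_level = levels[0]
    previous_response = detects(previous_level)
    for level in levels[1:]:
        response = detects(level)
        if response != previous_response:
            return (previous_level + level) / 2
        previous_level, previous_response = level, response
    return None


def estimate_threshold(detects, low, high, step, repeats=3):
    """Average the crossing points of alternating ascending and descending series."""
    ascending = list(range(low, high + 1, step))
    descending = ascending[::-1]
    crossings = []
    for _ in range(repeats):
        for series in (ascending, descending):
            crossing = run_series(detects, series)
            if crossing is not None:
                crossings.append(crossing)
    return mean(crossings) if crossings else None


if __name__ == "__main__":
    # Simulated participant (hypothetical): perceives vibration up to 500 Hz.
    simulated = lambda frequency_hz: frequency_hz <= 500
    print(estimate_threshold(simulated, low=100, high=1000, step=50))
```

A real participant's responses are noisy, so ascending and descending crossings typically differ; averaging over both directions helps offset the bias each direction introduces.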

Outcomes

- Variation Across Regions: Tactile perception thresholds varied significantly. The front region had the highest frequency threshold (720.14 Hz).

- Lower Sensitivity at the Back: The back region had the lowest frequency threshold (516.9 Hz), likely due to hair and less direct skin contact.

- Calibration for Consistency: These variations necessitate consistent frequency equalization across regions for effective haptic feedback.

For our subsequent studies, we took the lowest frequency threshold among the four positions (approximately 516 Hz) as the maximum perceivable frequency for every tactile signal we used.

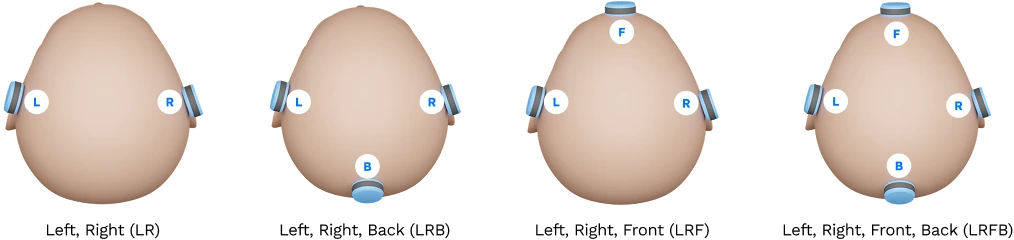

Identifying Preferred Configuration

With the frequency range established, we next set out to identify the optimal configuration for our four-actuator setup. Specifically, we wanted to determine how many actuators are necessary for precise sound localization. Our hypothesis was that more actuators would give better cueing, so we expected the four-actuator setup to outperform the others. We tested four configurations: left and right (LR); left, right, and back; left, right, and front (LRF); and left, right, front, and back.

Outcomes

- Highest Accuracy with Left-Right-Front Configuration: The LRF configuration had the highest localization accuracy at 89.91%.

- Improved Localization with Front Transducer: Adding a front transducer significantly improved accuracy and provided better confirmation cues.

- Challenges with Back Transducer: The LR setup had the lowest accuracy and longer task completion time, with frequent front-back sound confusion.

LRF achieved the highest accuracy and user preference due to effective directional confirmation cues and reduced cognitive load, leading to the selection of LRF for the next study.

Localized Audio-Tactile Pattern Mapping

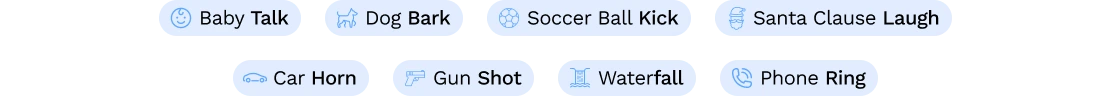

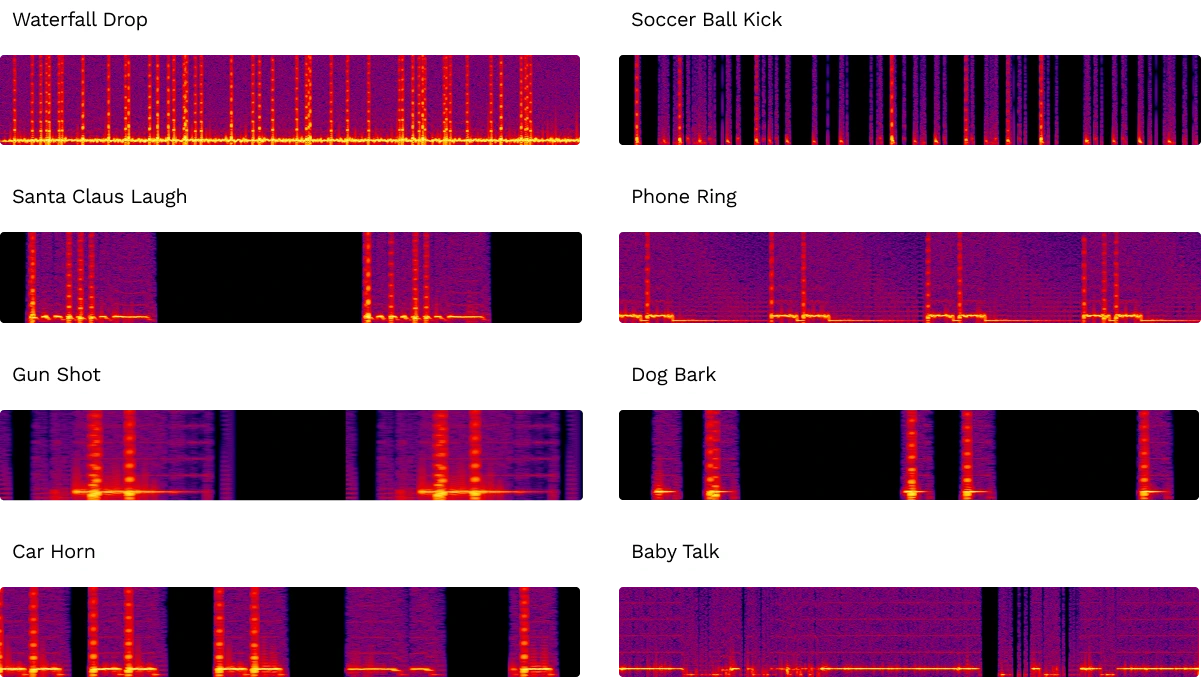

With the frequency cutoff for the actuators and the optimal three-channel arrangement identified, we next assessed whether this configuration can help users differentiate sound types through spatialized tactile feedback. We selected eight sounds representing different types and intents, following the taxonomy outlined by Dhruv Jain in his paper "Taxonomy of Sound," which we also used in our previous study, "SoundViz VR."

Below are the spectrograms of the eight sounds we selected.

Participants were asked to identify the source of each of the eight sounds in the VR kids' room used in Study 3.

Key Features

- Enhanced Accuracy with Distinct Patterns: High accuracy for distinct haptic patterns (e.g., Waterfall vs Gunshot).

- Challenges with Similar Patterns: Difficulty distinguishing similar patterns (e.g., Car Horn, Dog Bark), especially when close together.

- Recognition Based on Familiarity: Easier recognition of patterns resembling real-life vibrations, like a ringing phone.

Position-Based Sensitivity in Tactile Frequency

Each head position has a different sensitivity for DHH users wearing a VR headset, which points to the need for customized threshold estimation in any future head-based audio-tactile study.

Enhancing the precision with front actuators

Using a three-transducer configuration (Left, Right, Front) enhances sound localization accuracy for DHH users in VR, providing effective confirmation cues without sensory overload.

Audio-Tactile for Different Sound Types

Study 3 showed that audio-tactile transducers enable DHH users to identify distinct sound types through haptic patterns, a promising step toward making a wide range of sound types accessible to DHH users.